Introduction

With billions of €, $ and £ being poured into AI, the question of profitability arises. How will these companies ever make money? What is the end-game? Will the widespread use of AI usher in a new age for modern society?

This post voices the concern that uncritical and overoptimistic use of AI will be exploited by private companies to influence our daily lives in unprecedented ways. It examines the current, ongoing, aggressive push of this technology and the privacy and societal issues that come with it. It then extrapolates the current trend to what might happen in the future and how we might be able to avoid it.

When I’m talking about “AI”, I am specifically and only talking about modern-day generative AI products like ChatGPT, Copilot and DeepSeek. I am not talking about general machine learning or even LLMs for that matter. This post is about how private companies are trying to abuse you.

The Calculator

I want to start this post with a little story. Sometimes technology makes us unaware of our shortcomings. This also happened to me.

Fractional Mistakes

When I was in school, we were allowed to use calculators quite early. I really welcomed that decision, as I wasn’t a good (or even passable) math student. I strongly disliked calculating things, as careless mistakes (Flüchtigkeitsfehler) were common in my calculations and my results would never line up with the solution, even when I had the correct idea. There was another reason why I liked calculators. Before we got them, one of the last topics we discussed in class was fractions.

Addition, subtraction, multiplication and division of simple fractions. Child’s play, right? Well, to be honest: I just didn’t get it. For some reason, I couldn’t form a mental model of what fractions are supposed to be and how to work with them. Colorful examples with cakes and pizzas didn’t help me. I barely passed the test we had on it, and I still don’t know how that happened.

Machine Over Mind

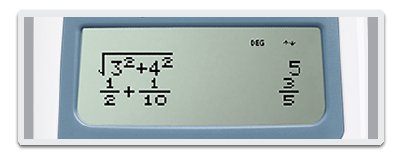

Imagine my delight when I found out that the calculator we got, the TI-30XS, was able to work with fractions out of the box!

Of course, that wasn’t its only feature, but it was by far the most important one to me. I was able to fearlessly work with fractions. Throughout the rest of my school life, I did not have to calculate a single fraction by hand. The calculator did it all for me.

Later I upgraded to a Casio fx-9860GII, which is one of my favorite devices of all time. Over time, those calculators sparked my interest in math more and more. Gone were the days when I couldn’t do simple calculations; now I could do as much math as I wanted, and I knew my fractions were always correct.

Blissful Ignorance

When I went to university to study computer science, calculators were not allowed in exams. People told us that “they wouldn’t be much use anyway”, and they were right. A calculator can’t write a mathematical proof or reason about mathematical objects. It can only calculate.

This wasn’t a big problem for me, and I passed my first two math exams on discrete math and linear algebra. However, in my third semester, it happened. I can still remember this moment. It was in an exercise session for my Calculus class. The teacher at the front wrote down a mathematical expression (I believe it was a series convergence problem) which had multiple fractions in it. It even had some unknowns, so this wasn’t trivial. Without comment, they wrote down an equivalent expression that had all the fractions mashed into a single one. I couldn’t believe, much less comprehend, what was going on. Looking around the room, nobody seemed to be disturbed by the wizardry happening on the whiteboard in front of us. How was this possible? What was even happening? I was thoroughly confused.

Helpless, I did what any self-respecting student would do: I took out my phone, went online and searched for how to calculate with fractions. I spent the rest of the exercise session reading pages that were clearly designed for school children. Those damn calculators had left me in a state of blissful ignorance. I didn’t even know what I didn’t know; unaware of my shortcomings, I was now left to relearn the most basic of tasks.[1]

Luckily, the calculator only replaced my ability to do some calculations. It didn’t replace my reasoning or thinking. It didn’t claim to do research for me or tell me about historical facts. The calculator is a simple tool, unlike what AI promises to be.

To Make a Return

Billions upon billions of whatever currencies you can imagine are being shoved into AI products: $14 billion from Microsoft, $10 billion from Honor, €200 billion from the EU, and lots more to come. It’s a new space race, but this time we are trying to produce the dumbest shit as fast as possible just so a few investors can continue to play ping-pong with their shares. Generative AI has no clear use case, even today. Though Altman is sure he knows how AGI can be built, we still haven’t seen anything but faulty, lying and disturbingly confident chatbots that are trained to be convincing rather than factual.

The people pushing this technology into the workforce rarely care about anything but power and money. They want to con managers into replacing real people with AI, not because it would make the world a better place, but to enrich themselves. It’s disgusting and sociopathic, and I know I am not the only one who thinks so.

Pump Up the Jam

However, will these companies actually ever make a real return on their investment? How can you make billions from software that objectively doesn’t solve any new problems? There are essentially two possibilities.

Either the technology actually becomes useful and people genuinely want to integrate it into their lives, or you simply monetize the product more aggressively. Let’s face it, the first option is not going to happen, so how will it be monetized more aggressively? You can’t make people pay even more for the waste of electricity that is AI, so you have to get more users! You need to pump it up!

AI will be forced into every product. Some companies, like Microsoft, are already doing it. Every application, device and service will have some feature that claims to be smarter than you. Windows Recall is a literal screen logger that lets an AI run rampant on everything you do on your computer. Microsoft promises that this data will not leave your computer, but we know that Microsoft lies whenever it can.[2] My prediction: a few months after launch, there will be an opt-in program that promises to improve your AI if you share your data, and after a few more months, it will become opt-out and enabled by default. People will be surveilled in real time by a private company without knowing it.

Other companies are following. Adobe products have AI built in. Every social media platform has some “Summarized by AI✨” feature that doesn’t work and only annoys users. This stuff is everywhere, not because it improves anything, but because it must be shoved into everybody’s faces. Otherwise, how can anyone claim a return on investment?

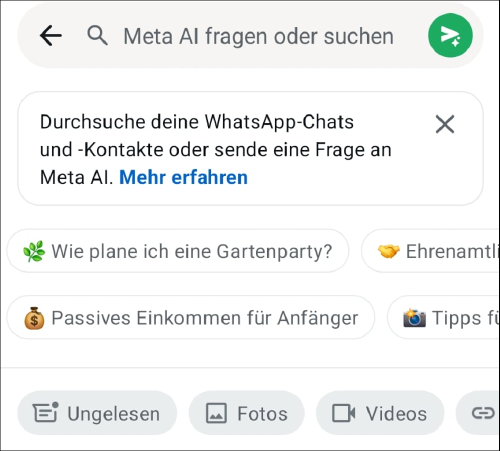

While I was writing this post, WhatsApp added an AI agent to its UI, which I can’t get rid of. In the screenshot above, it suggests some questions I could ask, with “💰 Passive Income for Beginners” instantly standing out. I did not cherry-pick this example. This really was the very first thing that Meta AI suggested to me. How wonderful is it that sleazy influencers aren’t the only ones who want to pull me into some idiotic passive income grift? AI really is becoming more life-like with every update.

The app’s UI now constantly reminds me that I should chat with its AI. The search bar connects to it, and so does the button above the “new message” button. However, I have not been made aware of any privacy policy regarding this. Will my messages be used for training? Will Meta collect the private information I share with its AI?

This is the first step in the aggressive push of AI onto us as users. A simple chat app now wants to give me financial advice.[3] It wants me to rely on it.

Tapping Into Minds

AI is remarkable in that it can collect private and sensitive data much more elegantly than the usual privacy invasion we see from big tech. The whole point of AI is that you tell it what you are working on, interested in and want to know: everything you are doing and thinking. Everything has to be made known to the AI.

The internet becomes deader every day. More and more content is being produced by bots, much of it powered by LLMs. This is bad news for the companies that scrape the web to train their models and keep them up to date with the latest happenings in the world, as training a machine learning model on its own output degrades its performance over time. So these companies need fresh data. If the content that people produce with the help of AI is no good, the data needs to come directly from the source: directly from the brains of the users.

Think about the absolutely insane idea of an AI e-mail assistant: an AI that reads all of your e-mails, summarizes them and even answers them for you if you tell it to. Just for a second, think about it. Would you let me read all your e-mails? Those subscription reminders, those “you have a new message” e-mails from social media and those e-mails from your parents about their health conditions. Would you let me read all of that?

No, you wouldn’t. But at some point, companies like Microsoft will make it mandatory for an AI to be able to scan everything you do. They can’t legally read your e-mails (or can they? I am not sure), but they can claim that reading all your e-mails with an AI is just a technical necessity to bring you the “best experience”.[4]

“Just a Tool”

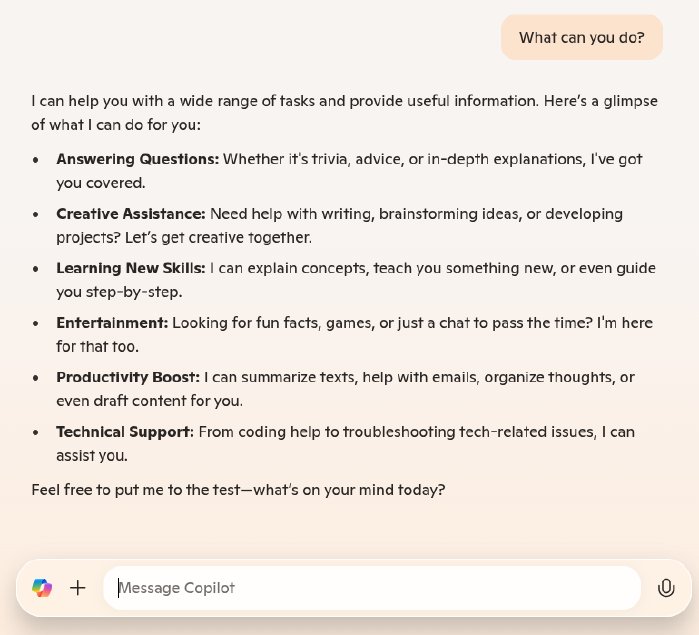

Whenever I tell people about the horrific implications that AI usage brings, I hear the same argument over and over: “It’s just a tool”. I find this argument flawed in many ways. No, AI is not just a tool. To demonstrate this, let me compare it to a calculator, an actual tool. Immediately, we should recognize a few differences:

- Task Specificity: The calculator has a very specific task and does not claim to do anything beyond it. The calculator does not advertise any mathematical skills beyond simple calculations. Even calculators with a built-in CAS don’t claim to do anything beyond what the CAS can do. This is in stark contrast to AI, which advertises itself as being able to do all sorts of tasks that lie outside the realm of human language interaction, such as logical reasoning.

- Domain Objectivity: The domain of calculators is objectively verifiable. If a calculator were to tell me that 2+2=5, we could make the objective claim that the calculator is defective. While there are some gray areas like floating-point accuracy, even those can be compared between models using objective measurements. AI, however, works in a highly subjective domain. While it is possible to falsify its statements when they are objectively wrong, it is much harder to do so when we talk about tasks like “summarizing”, “translating” or “improving grammar”. The results of these actions are harder to judge.

- Impartiality: The answers provided by the calculator are free of bias. The company making the calculator has no interest other than building a calculator that gives you correct, impartial answers. With AI, this is demonstrably not the case.

- Use-case unawareness: The calculator does not care what you use it for. Are you calculating the cost of a wedding or the trajectory of a rocket experiment? The calculator does not care about this context. AI products, on the other hand, need to analyze how they are used in order to be viable. The things you put into the AI will at some point be used, be it for training, market research or some other nefarious purpose.

AI can be a tool, but the products we see offered today are not tools.[5] They are personal data-collection monsters. They claim that they can do everything, when in reality their only use case is the penetration of private life and the collection of your data.

Take Over a Life

But why just interact with users and try to get their private data by letting them tell you about it? The next step in this push to get AI into every part of our lives is “AI agents”. The idea is simple: instead of just interacting via text, why shouldn’t the AI be allowed to control our computers and do tasks for us? While some describe this as the promise of “a new wave of productivity and innovation”, others (aka me) consider it the next step in making people dependent on a product of technological oligarchy.

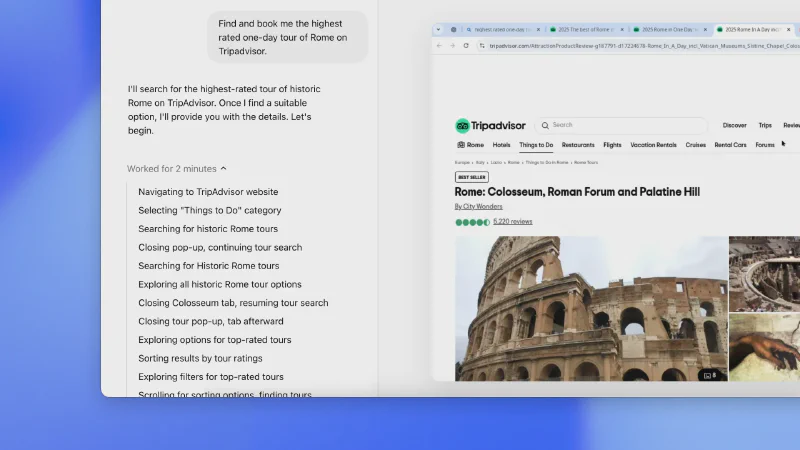

The example OpenAI came up with to sell its “Operator” was having it book you a tour through Rome on Tripadvisor.

Inside me there are two wolves: one is impressed that any software can do this autonomously, the other thinks, “Holy shit, this is lame.” I find this example truly perplexing, as I believe it to be a uniquely bad sales pitch:

- Anybody can do this without much effort.

- The AI cannot be aware of the user’s preferences.[6]

So, what is this about? Efficiency? The screenshot itself said that it took two whole minutes just to find the highest-rated tour. What is the point? Why would I pay for this? This strongly reminds me of assistants like Alexa, which really have no useful functionality built into them. Oh, you can tell me the weather? Wow, cool! There are about 1000 apps on every smartphone that can do this.

So once again, big tech is presenting us with technology that has no real use and makes no rational sense to use. Well, it might not make sense for us, but it does for the company providing it. How does this technology even work? According to OpenAI:

Operator can “see” (through screenshots) and “interact” (using all the actions a mouse and keyboard allow) with a browser, enabling it to take action on the web without requiring custom API integrations.

Oh, look! It’s another screen grabber! OpenAI’s Operator will stand behind me, watching over my shoulder, staring attentively at my screen. It will see everything: with whom and about what I am communicating. I will become the perfectly transparent customer, and in doing so, cease to be a customer and become the product itself, letting big tech in on my entire private life. However, OpenAI has obviously thought of these things:

Training opt out: Turning off ‘Improve the model for everyone’ in ChatGPT settings means data in Operator will also not be used to train our models.

That’s right. Training on your data is opt-out and enabled by default!

This is the next step in gaining more control over AI users. We are already at a point where AI could be used to aggressively manipulate users, and it is foreseeable that political bias will be infused into these models, but AI cannot yet directly control your actions. This changes once AI agents are mainstream. Deutsche Telekom already has plans to create a phone that is exclusively controlled by an AI agent. To me, it is only a matter of time until we see “AI agent only” laptops sold cheaply (much like Chromebooks) to force users into a specific ecosystem (again, much like Chromebooks).

These systems will not just know everything about you and your life, but they will make decisions for you. The restaurants you go to, the people you meet, the political party you vote for; all perceivable, all influenceable, all controllable.

I am eagerly awaiting the news story that describes an AI agent going rogue, hallucinating that its user is a murderer and reporting them to the police. I am also awaiting the first time an AI agent messes up so badly on the stock market that a few hedge funds go bust or a new global financial crisis is kicked off. Only, I don’t await that one quite so eagerly. What I am truly anxious about is the approaching reality of living in a society that relies on these agents to do almost every cognitive task, much like I relied on my calculator to calculate some silly fractions.

The Great Filter

Products like ChatGPT, Copilot and DeepSeek are recommendation algorithms on steroids. They have scanned everything. They have seen everything, and they only tell you about the things they want you to know. Interacting with AI is to interact with a filter. A filter that is not in your control.

Trusting Distortions

The survey “The Impact of Generative AI on Critical Thinking: Self-Reported Reductions in Cognitive Effort and Confidence Effects From a Survey of Knowledge Workers” concludes that, among knowledge workers, higher perceived trust in AI correlates with less critical thinking. This might not sound entirely surprising. After all, when somebody trusts AI to do the right thing, why would they fact-check it?

Are AI products even viable if users don’t fully trust them? If I tell OpenAI’s Operator to book me a flight, I have to trust it. Since I don’t, I don’t use the product, and the reason I don’t trust AI is that I have seen the many problems that come with the use of these language models. But what about the younger generations?

The generation that is being born right now will not know a time when technology wasn’t drenched with this AI bullshit. They will use AI in schools, they will use it in their private life, they will use it on every device they own. They will trust AI to do the right thing, just like my generation trusted search engines to do a good job.

What will become of critical thinking in this case? What will the effect be once a mass rollout of this technology has happened? The last time we saw a technology penetrate our lives this way was the massive adoption of social media. I don’t need to point out how disastrous social media has been for mental health and politics, as that is well known.

However, we need to extrapolate what the results of a global, uncritical and unrestricted use of AI tools might be. The risk of mass manipulation on an unprecedented level should be evident. In this post-truth society, a lying chatbot is just another normality, but this time, the lies can be generated by a powerful, tech-feudalistic cabal. Make no mistake. This is intentional. The fact that a former NSA director sits on OpenAI’s board of directors isn’t a coincidence.

Seeping Into Reality

Even when you are not using AI, encountering products of generative AI will be inevitable. E-mails, articles and social media content will all be partially written with AI. Text messages you receive from your friends will have been composed by an AI agent. The designs you see, the music you hear and the movies you watch will all have some generative aspect.

This stuff is not dystopian. It is reality. You are currently experiencing it. It is only a matter of time until your whole world will have AI elements in it. Your behavior will be scanned, analyzed and mangled to later serve tailor-made propaganda to you.

The people ruling over you and the world you inhabit will rely on this technology. The dumb fucks in the American administration already seem to when calculating tariffs. The world as we know it, filled with autonomous human beings, will cease to exist and be replaced by humans who interact through the medium of AI agents. Free thought will become a relic of the past, and filtered thought will replace it.

AI seems to be somewhat impartial right now, but this won’t last for long. While I was writing this article, Facebook announced that its Llama 4 model will be made to have a bias toward right-wing politics. Soon, when you ask AI about politics, news, history or common sense, certain information will be omitted, certain facts will be changed, certain connotations will be implied. In one or two years, will ChatGPT tell us who Mahmoud Khalil is? Will it remember who Edward Snowden or Chelsea Manning are? Which sources will it use, and which will it discard?

AI will decide who lives and dies. Sam Altman’s OpenAI is partnering with defense technology companies, and his buddy Peter Thiel’s Palantir is working on an AI truck that is advertised as “Reducing Soldier workflow burdens and cognitive load” and boasts “Accurate and effective targeting enabled by AI/ML”. AI can’t tell you how many “r”s there are in the word “Strawberry”, but it can shoot you dead. The companies that leave human life at the mercy of AI do not just want to create a helpful chatbot or everyday helper. They want AI to control every aspect of human life and death.

They want to be gods.

What Will Be Left?

I don’t want to read AI-generated articles with AI narration and AI summaries attached to them. I don’t want to listen to music that was composed and generated by AI. I don’t want to watch a movie for which AI wrote the script, created the screenplay, decided the cinematography and generated the actual material I am looking at. If we leave our culture in the hands of AI, our emotional connection to it becomes distorted.

I don’t want to live in a society where everyone around me is offloading their own cognitive abilities to a machine controlled by private capital. I don’t want to discuss politics with people who willfully opt in to propaganda and surveillance. I don’t want to talk to people who can’t think critically. I don’t want to see this society crumble because people cannot communicate with each other anymore. If we leave our society in the hands of AI, we live in a distorted society.

If we let AI seep into our lives, we will end up trapped in our own shells, with no ghost to be found inside; it will exist somewhere else, controlled by techno-feudal overlords. The surrounding reality will be shaped however they see fit. Our emotions, connections and decisions will not be ours.

A Way Out?

This is a bit of a doomerish post, isn’t it? Yeah, so let’s have some positive thoughts and plan ahead. What do we do now? How can we avoid catastrophe?

An obvious, immediate action you can take is to stop using AI on your devices. Refuse to use the garbage that you are being coerced to use. Never opt in to any data collection. Switch to apps that don’t force AI upon you. Use freedom-guaranteeing operating systems on your devices. Don’t use any smart devices.

Once you are free of AI, it’s time to start telling other people to do the same. Tell them about the dangers; tell them about the inhumanity; tell them about the things they will lose and destroy. If you are a teacher, don’t advertise AI to your students; tell them not to use it! If you are a software developer, don’t tell people to just use Copilot to write their code; be critical of their lack of professional skills and tell it to their faces. If you read AI-generated articles, shame the publication. If you are sent AI-generated replies in your personal chats, call people out on it and shame them for not taking communication with you seriously. Whoever wants to introduce a distortion into your world should be prepared to face backlash from you.

We, as a society, have to collectively come together and reject an artificial world.

1. Eventually, I learned how to work with fractions, and I even passed my Calculus exam after many tries.

2. Also, the implementation of Recall is completely insecure. Your data is stored unencrypted and can be extracted easily. Which underpaid intern designed this?!

3. How is this stuff even legal? Is it even legal? If an AI bot gives me advice that results in harm, who is to blame?

4. Whenever a company claims that you need to accept something from them to get the “best experience”, always assume that they are gaslighting you into giving up your rights. If they cannot give you the “best experience” upfront, you shouldn’t use their products.

5. I could make the point that using AI as a creative/writing tool is awful in itself, as you are substituting algorithmic slop for human expression and creativity. In fact, I have made this case before, and you can read my old post on it here. I also recommend this short explanation: “On the use of so-called ‘Generative AI’”.

6. Who would choose a tour based solely on its rating? Aren’t people usually interested in certain aspects of a historical city? Who is the target audience here? People who don’t actually care and just want to tell their peers that they went to Rome? Well, yeah, makes sense. Those kinds of people probably also ask ChatGPT what they should have for dinner.